- URL: https://<geoanalytics-url>/AggregatePoints

- Version Introduced: 10.5

Description

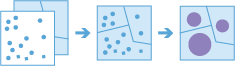

The AggregatePoints operation works with a layer of point features and a layer of areas. The layer of areas can be an input polygon layer, or it can be square, hexagonal, or H3 bins calculated when the task is run. The tool first determines which points fall within each area. After determining this point-in-area spatial relationship, it calculates statistics about all points in each area and assigns them to that area. The most basic statistic is the number of points within the area, but you can get other statistics as well.

For example, suppose you have point features of coffee shop locations and area features of counties, and you want to summarize coffee sales by county. Assuming the coffee shops have a TOTAL_SALES attribute, you can get the sum of all TOTAL_SALES values within each county, the minimum or maximum TOTAL_SALES value within each county, or other statistics such as the count, range, standard deviation, and variance.
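The point-in-area step and the per-area statistics described above can be sketched in a few lines of Python. This is a minimal illustration, not how GeoAnalytics Server implements the operation: it assumes planar coordinates and single-ring polygons without holes, uses an even-odd (ray casting) containment test, and `aggregate_points` is a hypothetical helper. Points lying exactly on a shared boundary may be assigned to either polygon.

```python
# Minimal sketch: planar coordinates, single-ring polygons without holes.
# aggregate_points is a hypothetical helper, not part of any ArcGIS API.

def point_in_ring(x, y, ring):
    """Even-odd (ray casting) test: is (x, y) inside the closed ring?"""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Count crossings of a horizontal ray cast to the right of (x, y).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def aggregate_points(points, polygons, field):
    """Summarize `field` for the points that fall in each polygon.

    points: list of dicts with "x", "y", and the numeric field.
    polygons: dict mapping an area name to its ring of (x, y) vertices.
    """
    results = {}
    for name, ring in polygons.items():
        values = [p[field] for p in points if point_in_ring(p["x"], p["y"], ring)]
        stats = {"count": len(values)}  # the count is always reported
        if values:
            stats.update(
                sum=sum(values),
                min=min(values),
                max=max(values),
                range=max(values) - min(values),
            )
        results[name] = stats
    return results


counties = {
    "A": [(0, 0), (10, 0), (10, 10), (0, 10)],
    "B": [(10, 0), (20, 0), (20, 10), (10, 10)],
}
shops = [
    {"x": 2, "y": 3, "TOTAL_SALES": 100},
    {"x": 5, "y": 5, "TOTAL_SALES": 50},
    {"x": 15, "y": 5, "TOTAL_SALES": 70},
]
print(aggregate_points(shops, counties, "TOTAL_SALES"))
```

Standard deviation and variance would be added the same way, from the same `values` list.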

This tool can also work on data that is time-enabled. If time is enabled on the input points, the time slicing options are available. Time slicing allows you to calculate the point-in-area relationship for a specific slice of time. For example, you could look at hourly intervals, which would produce results for each hour.
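The sketch below shows one way to read the time step parameters together: a slice of length timeStepInterval starts every timeStepRepeatInterval, aligned to timeStepReference (in epoch milliseconds). This interpretation, the hypothetical `time_slice_start` helper, and the unit table (which covers only fixed-length units and omits calendar units such as months) are assumptions for illustration. The reference value 946684800000 is taken from the sample request on this page and corresponds to 2000-01-01T00:00:00Z.

```python
# Sketch of time slicing for instant-type times in epoch milliseconds.
# The unit table and time_slice_start are hypothetical; calendar units such as
# months or years are omitted because their length varies.
UNIT_MS = {
    "Milliseconds": 1,
    "Seconds": 1_000,
    "Minutes": 60_000,
    "Hours": 3_600_000,
    "Days": 86_400_000,
    "Weeks": 604_800_000,
}


def time_slice_start(t_ms, interval, interval_unit,
                     repeat=None, repeat_unit=None, reference_ms=0):
    """Return the start (ms) of the slice containing t_ms, or None if t_ms
    falls in a gap between repeating slices."""
    length = interval * UNIT_MS[interval_unit]
    # Without a repeat interval, slices are back to back.
    period = repeat * UNIT_MS[repeat_unit] if repeat is not None else length
    # divmod floors, so times before the reference also map to a slice.
    k, offset = divmod(t_ms - reference_ms, period)
    if offset >= length:
        return None
    return reference_ms + k * period


# 20-minute slices repeating daily, aligned to 2000-01-01T00:00:00Z.
ref = 946_684_800_000
print(time_slice_start(ref + 2 * 86_400_000 + 10 * 60_000, 20, "Minutes", 1, "Days", ref))
```

With hourly slices and no repeat interval, every time stamp falls into exactly one slice; with a repeat interval longer than the slice, time stamps in the gaps are left out.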

For an example with time, suppose you have point features of every transaction made at a coffee shop location and no area layer. The data has been recorded over a year, and each transaction has a location and a time stamp. Assuming each transaction has a TOTAL_SALES attribute, you can get the sum of all TOTAL_SALES values within the space and time of interest. If these transactions are for a single city, you could generate 1-kilometer square bins and use weekly time slices to summarize the transactions in both time and space.
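A rough sketch of the coffee shop scenario: each transaction is keyed by a 1 km square bin and a weekly slice, and TOTAL_SALES is summed per key. The projected coordinates in meters, the grid origin at (0, 0), and the hypothetical `space_time_sums` helper are assumptions; the service chooses its own bin layout and projection.

```python
import math
from collections import defaultdict

# Sketch only: coordinates are assumed to be in a projected CRS in meters, and
# the bin grid is assumed to start at (0, 0); the service picks its own layout.
WEEK_MS = 7 * 24 * 60 * 60 * 1000


def space_time_sums(transactions, bin_size_m=1000.0, reference_ms=0,
                    field="TOTAL_SALES"):
    """Group (x, y, t_ms, attrs) tuples by (column, row, week) and sum `field`."""
    totals = defaultdict(lambda: {"count": 0, "sum": 0})
    for x, y, t_ms, attrs in transactions:
        col = math.floor(x / bin_size_m)
        row = math.floor(y / bin_size_m)
        week = (t_ms - reference_ms) // WEEK_MS
        cell = totals[(col, row, week)]
        cell["count"] += 1
        cell["sum"] += attrs[field]
    return dict(totals)


transactions = [
    (100, 200, 0, {"TOTAL_SALES": 5}),
    (900, 50, WEEK_MS - 1, {"TOTAL_SALES": 7}),
    (1500, 200, 0, {"TOTAL_SALES": 3}),
    (100, 200, WEEK_MS, {"TOTAL_SALES": 4}),
]
for key, stats in sorted(space_time_sums(transactions).items()):
    print(key, stats)
```

Each key pairs a square bin with a weekly slice, so the same square can appear once per week that has transactions.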

Request parameters

| Parameter | Details |
|---|---|
| pointLayer (Required) | The point features that will be aggregated into the polygons in polygonLayer or into bins of the specified binSize. Syntax: See Feature input for the forms this parameter can take. |
| binType (Optional) | The type of bin that will be generated and into which points will be aggregated. For Square bins, the number and units specified determine the height and length of the square. For Hexagon bins, the number and units specified determine the distance between parallel sides. Analysis using Square or Hexagon bins requires a projected coordinate system. At 10.6.1, if a projected coordinate system is not specified when running analysis, the World Cylindrical Equal Area (WKID 54034) projection is used. At 10.7 or later, if a projected coordinate system is not specified when running analysis, a projection is picked based on the extent of the data. Analysis using H3 bins is supported at 11.2 or later and requires World Geodetic System 1984 (WKID 4326). If a different coordinate system is specified when running analysis with H3 bins, the input points are automatically transformed into World Geodetic System 1984. Values: H3 \| Hexagon \| Square (default). Note: Either binType or polygonLayer must be specified. If binType is specified, binSize and binSizeUnit must be included; if binType is H3, binResolution can be used instead of binSize and binSizeUnit. |
| binSize (Required if binType is used and binResolution is not used) | The distance for the bins of type binType into which pointLayer will be aggregated. For Square bins, the number and units specified determine the height and length of the square. For Hexagon bins, the number and units specified determine the distance between parallel sides. For H3 bins, the H3 resolution is chosen that produces bins with a distance between parallel sides closest to the number and units specified. |
| binSizeUnit (Required if binSize is used) | The distance unit for the bins into which pointLayer will be aggregated. Values: Meters \| Kilometers \| Feet \| FeetInt \| FeetUS \| Miles \| MilesInt \| MilesUS \| NauticalMiles \| NauticalMilesInt \| NauticalMilesUS \| Yards \| YardsInt \| YardsUS |
| binResolution (Required if binType is H3 and binSize is not used) | This parameter is available at ArcGIS GeoAnalytics Server 11.2 or later. The resolution of the H3 bins into which pointLayer will be aggregated. This value is used only when binType is H3 and binSize is not specified. Choose a resolution between 0 and 15, where 0 produces the largest bins and 15 produces the smallest bins. |
| polygonLayer (Required if binType is not used) | The polygon features (areas) into which the input points will be aggregated. This parameter is not required if binType is specified along with binSize and binSizeUnit (or binResolution for H3 bins). Syntax: See Feature input for the forms this parameter can take. |
| timeStepInterval (Optional) | A numeric value that specifies the duration of the time step interval. The default is none. This option is only available if the input points are time enabled and represent an instant in time. |
| timeStepIntervalUnit (Optional) | A string that specifies the units of the time step interval. The default is none. This option is only available if the input points are time enabled and represent an instant in time. |
| timeStepRepeatInterval (Optional) | A numeric value that specifies how often the time step repeat occurs. The default is none. This option is only available if the input points are time enabled and of time type instant. |
| timeStepRepeatIntervalUnit (Optional) | A string that specifies the temporal unit of the time step repeat. The default is none. This option is only available if the input points are time enabled and of time type instant. |
| timeStepReference (Optional) | A date that specifies the reference time to align the time slices to, represented in milliseconds from epoch. The default is January 1, 1970, at 12:00 a.m. (epoch time stamp 0). This option is only available if the input points are time enabled and of time type instant. |
| summaryFields (Optional) | A list of field names and statistical summary types to calculate. The count of points within each polygon is always returned. By default, all statistics are returned. onStatisticField specifies the name of a field in pointLayer, and statisticType specifies the statistic to calculate for that field, such as Mean or Sum. |
| outputName (Required) | The task creates a feature service of the results. You define the name of the service. |
| context (Optional) | The context parameter contains additional settings that affect task execution. This task supports four context settings, including extent, a bounding box that defines the analysis area (used in the example request). |
| f | The response format. The default response format is html. Values: html \| json |
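The rules in the binType note can be checked on the client before a job is submitted. The sketch below uses hypothetical helpers, `build_params` and `build_submit_url`, to validate those rules and JSON-encode the object-valued parameters (pointLayer, polygonLayer, summaryFields, context) into a submitJob URL. It covers only a subset of the parameters in the table, and the server remains the authority on what it accepts.

```python
import json
from urllib.parse import urlencode

BIN_TYPES = {"H3", "Hexagon", "Square"}


def build_params(point_layer, output_name, *, bin_type=None, bin_size=None,
                 bin_size_unit=None, bin_resolution=None, polygon_layer=None,
                 summary_fields=None, context=None, f="json"):
    """Check the binType/polygonLayer rules and return request parameters.

    Hypothetical helper covering a subset of AggregatePoints parameters;
    object-valued parameters are JSON-encoded.
    """
    if bin_type is None and polygon_layer is None:
        raise ValueError("Either binType or polygonLayer must be specified")
    if bin_size is not None and bin_size_unit is None:
        raise ValueError("binSizeUnit is required when binSize is used")
    if bin_type is not None:
        if bin_type not in BIN_TYPES:
            raise ValueError(f"Unknown binType: {bin_type}")
        if bin_type == "H3" and bin_resolution is not None:
            if not 0 <= bin_resolution <= 15:
                raise ValueError("binResolution must be between 0 and 15")
        elif bin_size is None:
            raise ValueError("binSize and binSizeUnit (or binResolution for H3) are required")

    params = {
        "pointLayer": json.dumps(point_layer),
        "outputName": output_name,
        "f": f,
    }
    optional = {
        "binType": bin_type,
        "binSize": bin_size,
        "binSizeUnit": bin_size_unit,
        "binResolution": bin_resolution,
        "polygonLayer": None if polygon_layer is None else json.dumps(polygon_layer),
        "summaryFields": None if summary_fields is None else json.dumps(summary_fields),
        "context": None if context is None else json.dumps(context),
    }
    # Send only the parameters that were actually set.
    params.update({k: v for k, v in optional.items() if v is not None})
    return params


def build_submit_url(task_url, point_layer, output_name, **kwargs):
    """Return a submitJob URL for the AggregatePoints task at task_url."""
    params = build_params(point_layer, output_name, **kwargs)
    return f"{task_url}/submitJob?{urlencode(params)}"


url = build_submit_url(
    "https://webadaptor.domain.com/server/rest/services/System/GeoAnalyticsTools/GPServer/AggregatePoints",
    {"url": "https://webadaptor.domain.com/server/rest/services/Hurricane/hurricaneTrack/0"},
    "myOutput",
    bin_type="Square", bin_size=100, bin_size_unit="Meters",
    summary_fields=[{"statisticType": "Mean", "onStatisticField": "Annual_Sales"}],
)
print(url)
```

`urlencode` percent-encodes the JSON values, so braces and quotes survive transport; for long requests, the same parameters can be sent as a form-encoded POST body instead of a query string.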

Example usage

Below is a sample request URL for AggregatePoints:

https://webadaptor.domain.com/server/rest/services/System/GeoAnalyticsTools/GPServer/AggregatePoints/submitJob?pointLayer={"url":"https://webadaptor.domain.com/server/rest/services/Hurricane/hurricaneTrack/0"}&binType=Square&binSize=100&binSizeUnit=Meters&polygonLayer={}&timeStepInterval=20&timeStepIntervalUnit=Minutes&timeStepRepeatInterval=1&timeStepRepeatIntervalUnit=Days&timeStepReference=946684800000&summaryFields=[{"statisticType":"Mean","onStatisticField":"Annual_Sales"},{"statisticType":"Sum","onStatisticField":"Annual_Sales"}]&outputName=myOutput&context={"extent":{"xmin":-122.68,"ymin":45.53,"xmax":-122.45,"ymax":45.6,"spatialReference":{"wkid":4326}}}&f=json

Response

When you submit a request, the service assigns a unique job ID for the transaction.

{
  "jobId": "<unique job identifier>",
  "jobStatus": "<job status>"
}

After the initial request is submitted, you can use jobId to periodically check the status of the job and messages as described in Check job status. Once the job has successfully completed, use jobId to retrieve the results. To track the status, you can make a request of the following form:

https://<analysis-url>/AggregatePoints/jobs/<jobId>

Accessing results

When the status of the job request is esriJobSucceeded, you can access the results of the analysis by making a request of the following form:

https://<analysis-url>/AggregatePoints/jobs/<jobId>/results/output?token=<your token>&f=json

| Response | Description |
|---|---|
| output | output always contains polygon features. The number of resulting polygons depends on the locations of the points in pointLayer. The layer inherits all attributes of the input polygon layer (when polygonLayer is used), has a Count attribute containing the number of points enclosed by each polygon, and by default contains all statistics for each field in pointLayer. If summaryFields is specified in the task request, the layer contains the statistics specified in summaryFields instead. For example, if you requested only the Mean statistic for the Annual_Sales field, the resulting polygon features would have two attributes, Count and mean_Annual_Sales, containing the calculated values. The result has properties for parameter name, data type, and value. The contents of value depend on the outputName parameter provided in the initial request; value contains the URL of the feature service layer. See Feature output for more information about how the result layer is accessed. |
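The job workflow (submit, poll the status URL, then read the output result) can be sketched as follows. `wait_for_output` is a hypothetical helper. It assumes the token and f=json are passed as query parameters on each request, that value in the output result is an object with a url key (check Feature output for the exact shape your server returns), and that the terminal status names other than esriJobSucceeded follow the standard geoprocessing job statuses. The fetch and sleep functions are injectable so the loop can be exercised without a live server.

```python
import json
import time
import urllib.request
from urllib.parse import urlencode

# Only esriJobSucceeded appears on this page; the other terminal statuses are
# assumed from the standard geoprocessing job status values.
TERMINAL_STATUSES = {"esriJobSucceeded", "esriJobFailed",
                     "esriJobTimedOut", "esriJobCancelled"}


def get_json(url):
    """Fetch a URL and decode its JSON body."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def wait_for_output(task_url, job_id, token, *, fetch=get_json,
                    sleep=time.sleep, poll_seconds=5.0, max_polls=720):
    """Poll the job until it finishes, then return the output layer URL.

    Hypothetical helper; assumes the output result's value is {"url": ...}.
    """
    query = urlencode({"token": token, "f": "json"})
    job_url = f"{task_url}/jobs/{job_id}"
    for _ in range(max_polls):
        status = fetch(f"{job_url}?{query}")["jobStatus"]
        if status in TERMINAL_STATUSES:
            break
        sleep(poll_seconds)
    else:
        raise TimeoutError(f"Job {job_id} did not finish after {max_polls} polls")
    if status != "esriJobSucceeded":
        raise RuntimeError(f"Job {job_id} ended with status {status}")
    result = fetch(f"{job_url}/results/output?{query}")
    # result holds paramName, dataType, and value; value carries the layer URL.
    return result["value"]["url"]
```

For example, `wait_for_output("https://<analysis-url>/AggregatePoints", job_id, token)` polls every five seconds for roughly an hour before giving up.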